How To Upload A File In Google Groups

Use Cases and a Few Different Ways to Get Files Into Google Cloud Storage

Including AppEngine with Firebase Resumable Uploads

For this article I will break down a few different ways to interact with Google Cloud Storage (GCS). The GCP docs state the following ways to upload your data: via the UI, via the gsutil CLI tool, or via the JSON API in various languages. I'll go over a few specific use cases and approaches for the ingress options to GCS below.

1. upload form on Google App Engine (GAE) using the JSON API

Use case: public upload portal (small files)

2. upload form with Firebase on GAE using the JSON API

Use case: public upload portal (large files), uploads within mobile app

3. gsutil command line integrated with scripts or schedulers like cron

Use case: backups/archive, integration with scripts, migrations

4. S3 / GCP compatible file management programs such as Cyberduck

Use case: cloud storage management via desktop, migrations

5. Cloud Function (GCF)

Use case: integration, changes in buckets, HTTP requests

6. Cloud console

Use case: cloud storage management via desktop, migrations

1. App Engine nodejs with JSON API for smaller files

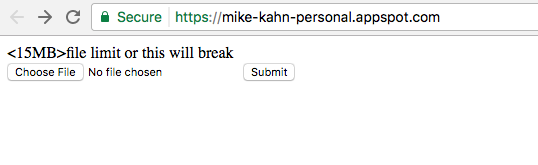

You can launch a small nodejs app on GAE for accepting smaller files (~20MB) directly to GCS pretty easily. I started with the nodejs GCS sample for GAE on the GCP GitHub account here.

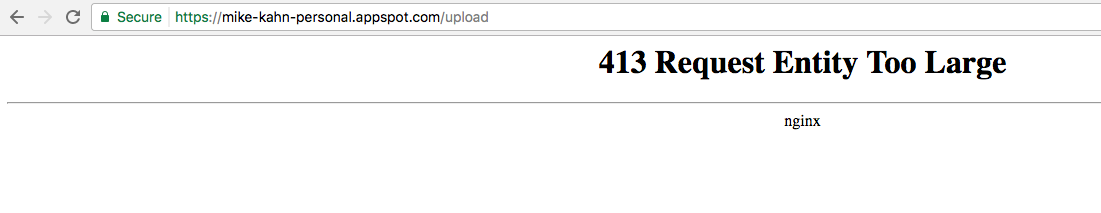

This is a nice solution for integrating uploads around 20MB. Just remember the nginx servers behind GAE have a file upload limit. So if you try and upload something say around 50MB, you'll receive an nginx error: ☹️

You can try and up the file size limit in the js file, but the web servers behind GAE will still have a limit for file uploads. So, if you plan to create an upload form on App Engine, be sure to have a file size limitation in your UI.
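As a rough illustration of that size check, here is a minimal sketch in Python (the sample app itself is nodejs, so treat this as logic to port rather than drop-in code). The 20MB ceiling is taken from the sizes discussed above; `MAX_UPLOAD_BYTES` and `check_upload_size` are hypothetical names, and the real limit enforced in front of GAE should be confirmed before relying on this number.

```python
# Hypothetical limit, based on the ~20MB figure used in this article.
MAX_UPLOAD_BYTES = 20 * 1024 * 1024


def check_upload_size(size_bytes, limit_bytes=MAX_UPLOAD_BYTES):
    """Return (ok, message) for a file of size_bytes checked against limit_bytes."""
    if size_bytes < 0:
        raise ValueError("size_bytes must be non-negative")
    if size_bytes > limit_bytes:
        limit_mb = limit_bytes / (1024 * 1024)
        # Reject before the upload starts, so the user never hits the server error.
        return False, f"File is too large; the limit is {limit_mb:.0f} MB."
    return True, "OK"
```

Running the same check in the form and again on the server keeps oversized files from ever reaching the web servers.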

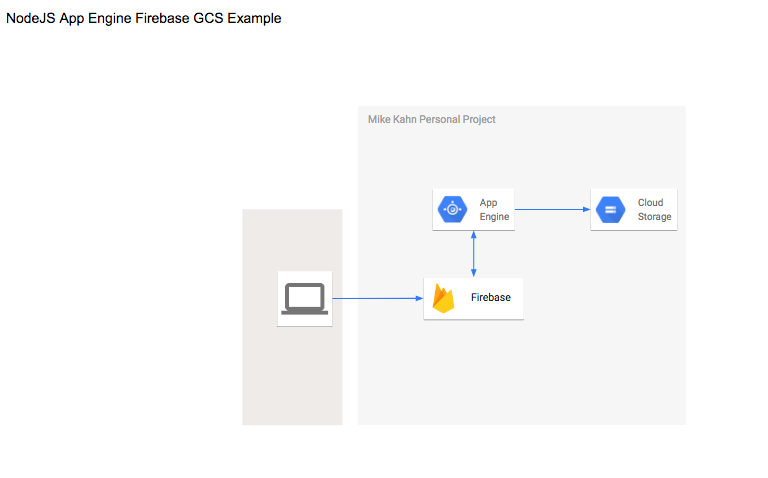

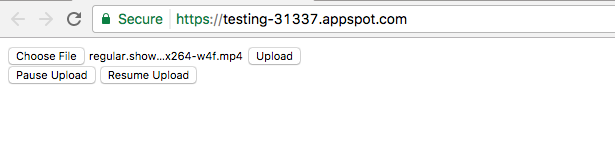

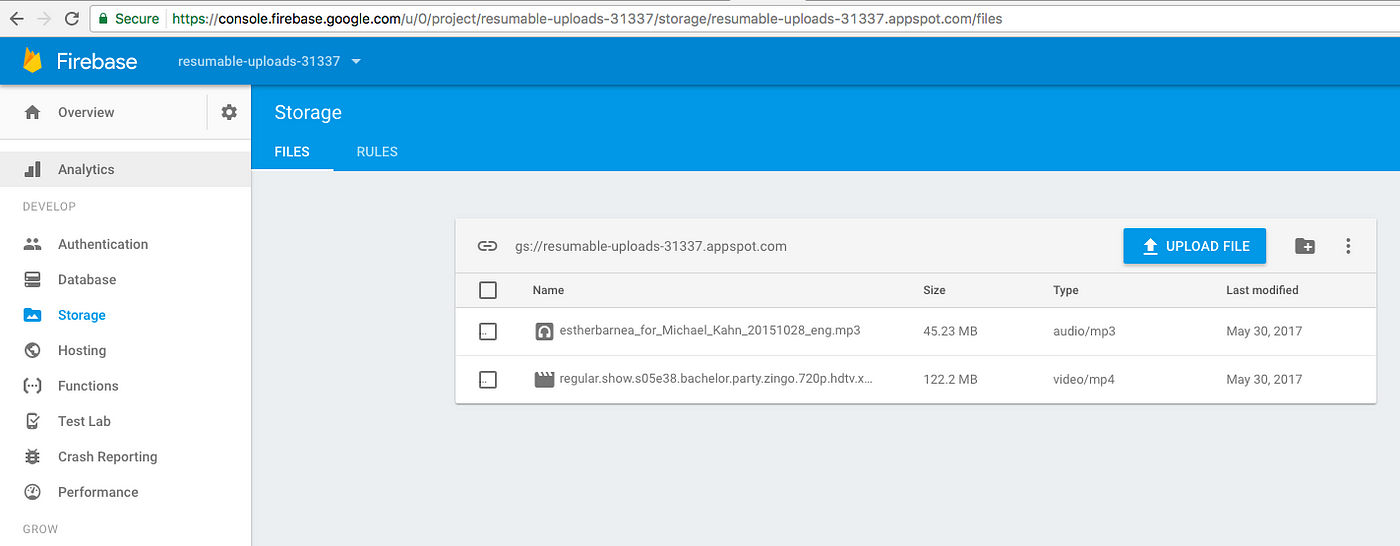

2. App Engine nodejs Firebase with JSON API and resumable uploads for large files

Since the previous example only works for smaller files, I wondered how we can solve for uploading larger files, say 100MB or 1GB? I started with the nodejs App Engine storage example here.

After attempting to use resumable uploads in the GCS API with TUS and failing, I enlisted help from my friend Nathan @ www.incline.digital to help with another approach.

With the help of Nathan we integrated resumable uploads with the Firebase SDK. Code can be found here

https://github.com/mkahn5/gcloud-resumable-uploads.

Reference: https://firebase.google.com/docs/storage/web/upload-files

While not very elegant, with no status bar or anything fancy, this solution does work for uploading large files from the web. 🙌🏻
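The idea behind a resumable upload is that the file travels in chunks, each labelled with its byte range, so an interrupted transfer can pick up from the last byte the server confirmed. The sketch below only computes the `Content-Range` values such a transfer would send; it is not what the Firebase SDK does internally, and `chunk_ranges` is a hypothetical helper. It assumes the GCS guidance that chunk sizes be multiples of 256 KiB, except for the final chunk.

```python
CHUNK_MULTIPLE = 256 * 1024  # GCS asks for chunk sizes in multiples of 256 KiB


def chunk_ranges(total_bytes, chunk_size=8 * CHUNK_MULTIPLE, resume_from=0):
    """Yield a Content-Range header value for each chunk still to be sent."""
    if chunk_size <= 0 or chunk_size % CHUNK_MULTIPLE != 0:
        raise ValueError("chunk_size must be a positive multiple of 256 KiB")
    if not 0 <= resume_from <= total_bytes:
        raise ValueError("resume_from must lie within the file")
    start = resume_from
    while start < total_bytes:
        # The range end is inclusive, hence the -1.
        end = min(start + chunk_size, total_bytes) - 1
        yield f"bytes {start}-{end}/{total_bytes}"
        start = end + 1
```

Passing `resume_from` with the offset the server last acknowledged skips the chunks that already arrived, which is what makes large uploads survive a dropped connection.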

3. gsutil from local or remote

gsutil makes it easy to copy files to and from cloud storage buckets.

Just make sure you have the Google Cloud SDK on your workstation or remote server (https://cloud.google.com/sdk/downloads), set your project, authenticate, and that's it.

mkahnucf@meanstack-3-vm:~$ gsutil ls

gs://artifacts.testing-31337.appspot.com/

gs://staging.testing-31337.appspot.com/

gs://testing-31337-public/

gs://testing-31337.appspot.com/

gs://us.artifacts.testing-31337.appspot.com/

gs://vm-config.testing-31337.appspot.com/

gs://vm-containers.testing-31337.appspot.com/

mkahnucf@meanstack-3-vm:~/nodejs-docs-samples/appengine/storage$ gsutil cp app.js gs://testing-31337-public

Copying file://app.js [Content-Type=application/javascript]...

/ [1 files][ 2.7 KiB/ 2.7 KiB]

Operation completed over 1 objects/2.7 KiB.

More details here.

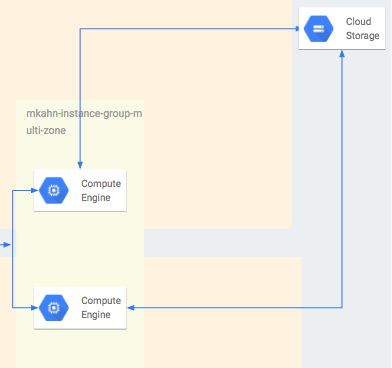

gsutil makes it easy to automate backup of directories, sync changes in directories, back up database dumps, and integrate with apps or schedulers for scripted file uploads to GCS.

Below is the rsync cron I have for my cloud storage bucket and the html files on my blog. This way I have consistency between my GCS bucket and my GCE instances if I decide to upload a file via www or via the GCS UI.

root@mkahncom-instance-group-multizone-kr5q:~# crontab -l

*/2 * * * * gsutil rsync -r /var/www/html gs://mkahnarchive/mkahncombackup

*/2 * * * * gsutil rsync -r gs://mkahnarchive/mkahncombackup /var/www/html
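If you would rather generate those crontab entries from a script, a small sketch like the following could work. `rsync_command` and `crontab_line` are hypothetical helpers; the paths and bucket are the ones from the crontab above, and the optional `-n` flag is gsutil rsync's dry-run mode, which is handy for previewing a sync before scheduling it.

```python
import shlex


def rsync_command(source, destination, dry_run=False):
    """Build the argument list for a recursive gsutil rsync."""
    cmd = ["gsutil", "rsync", "-r"]
    if dry_run:
        cmd.append("-n")  # dry run: report what would change without copying
    cmd += [source, destination]
    return cmd


def crontab_line(schedule, command):
    """Join a cron schedule and a command list into one crontab entry."""
    return f"{schedule} {shlex.join(command)}"


if __name__ == "__main__":
    local, bucket = "/var/www/html", "gs://mkahnarchive/mkahncombackup"
    # Every two minutes: push local changes up, then pull bucket changes down.
    print(crontab_line("*/2 * * * *", rsync_command(local, bucket)))
    print(crontab_line("*/2 * * * *", rsync_command(bucket, local)))
```

Building the command as a list also means it can be passed straight to `subprocess.run` for a one-off sync.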

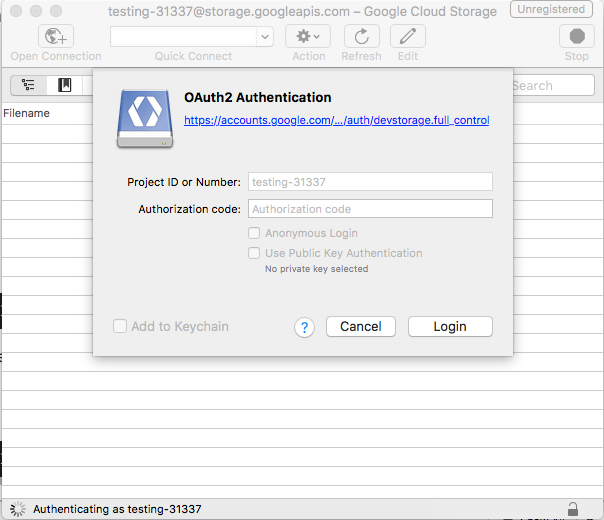

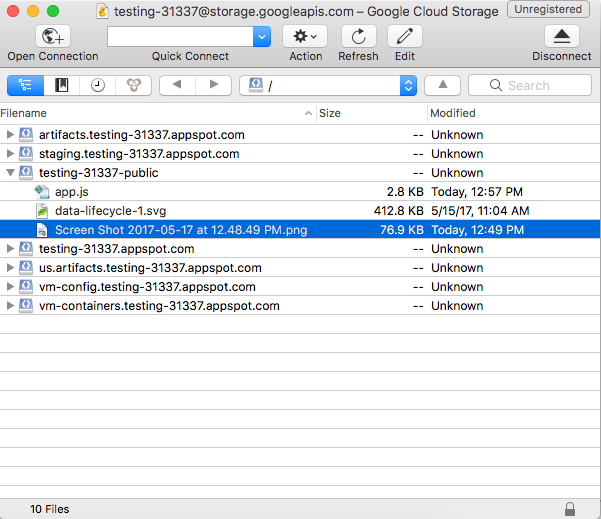

4. Cyberduck (MacOS) or any application with an s3 interface

Enjoy an FTP-client-type experience with Cyberduck on MacOS for GCS.

Cyberduck has very nice OAuth integration for connecting to the GCS API built into the interface.

After authenticating with OAuth you can browse all of your buckets and upload to them via the Cyberduck app. Nice option to have for moving many directories or folders into multiple buckets.

More info on Cyberduck here.

5. Cloud Function

You can also configure a Google Cloud Function (GCF) to upload files to GCS from a remote or local location. The tutorial below is just for uploading files in a directory to GCS. Run the cloud function and it zips a local directory's files and puts the zip into the GCS stage bucket.

Try the tutorial:

https://cloud.google.com/functions/docs/tutorials/storage

Michaels-iMac:gcf_gcs mkahnimac$ gcloud beta functions deploy helloGCS --stage-bucket mike-kahn-functions --trigger-bucket mikekahn-public-upload

Copying file:///var/folders/kq/5kq2pt090nx3ghp667nwygz80000gn/T/tmp6PXJmJ/fun.zip [Content-Type=application/zip]…

- [1 files][ 634.0 B/ 634.0 B]

Operation completed over 1 objects/634.0 B.

Deploying function (may take a while - up to 2 minutes)…done.

availableMemoryMb: 256

entryPoint: helloGCS

eventTrigger:

eventType: providers/cloud.storage/eventTypes/object.change

resources: projects/_/buckets/mikekahn-public-upload

latestOperation: operations/bWlrZS1rYWhuLXBlcnNvbmFsL3VzLWNlbnRyYWwxL2hlbGxvR0NTL1VFNmhlY1RZQV9j

name: projects/mike-kahn-personal/locations/us-central1/functions/helloGCS

serviceAccount: mike-kahn-personal@appspot.gserviceaccount.com

sourceArchiveUrl: gs://mike-kahn-functions/us-central1-helloGCS-wghzlmkeemix.zip

status: READY

timeout: 60s

updateTime: '2017-05-31T03:08:05Z'

You can also use the cloud functions you created to display bucket logs. Below shows a file uploaded via my public upload form and deleted via the console UI. This could be handy for pub/sub notifications or for reporting.

Michaels-iMac:gcf_gcs mkahnimac$ gcloud beta functions logs read helloGCS

LEVEL NAME EXECUTION_ID TIME_UTC LOG

D helloGCS 127516914299587 2017-05-31 03:46:19.412 Function execution started

I helloGCS 127516914299587 2017-05-31 03:46:19.502 File FLIGHTS BANGKOK.xlsx metadata updated.

D helloGCS 127516914299587 2017-05-31 03:46:19.523 Function execution took 113 ms, finished with status: 'ok'

D helloGCS 127581619801475 2017-05-31 18:31:00.156 Function execution started

I helloGCS 127581619801475 2017-05-31 18:31:00.379 File FLIGHTS BANGKOK.xlsx deleted.

D helloGCS 127581619801475 2017-05-31 18:31:00.478 Function execution took 323 ms, finished with status: 'ok'
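The "metadata updated" and "deleted" messages in that log come from the function branching on each storage event. The tutorial's function is JavaScript; the Python sketch below only mirrors that kind of branching. It assumes the object.change event payload carries `name`, `resourceState`, and `metageneration` fields, and `describe_event` is a hypothetical name.

```python
def describe_event(file_event):
    """Turn a storage object.change payload (assumed shape) into a log message."""
    name = file_event.get("name", "<unknown>")
    if file_event.get("resourceState") == "not_exists":
        # The object no longer exists, so this event was a delete.
        return f"File {name} deleted."
    if str(file_event.get("metageneration")) == "1":
        # First metadata generation: the object was just created.
        return f"File {name} uploaded."
    return f"File {name} metadata updated."
```

For example, `describe_event({"name": "FLIGHTS BANGKOK.xlsx", "resourceState": "not_exists"})` returns the same "deleted" message seen in the log above.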

Cloud Functions can come in handy for background tasks like regular maintenance triggered by events on your GCP infrastructure or by activity on HTTP applications. Check out the how-to guides for writing and deploying cloud functions here.

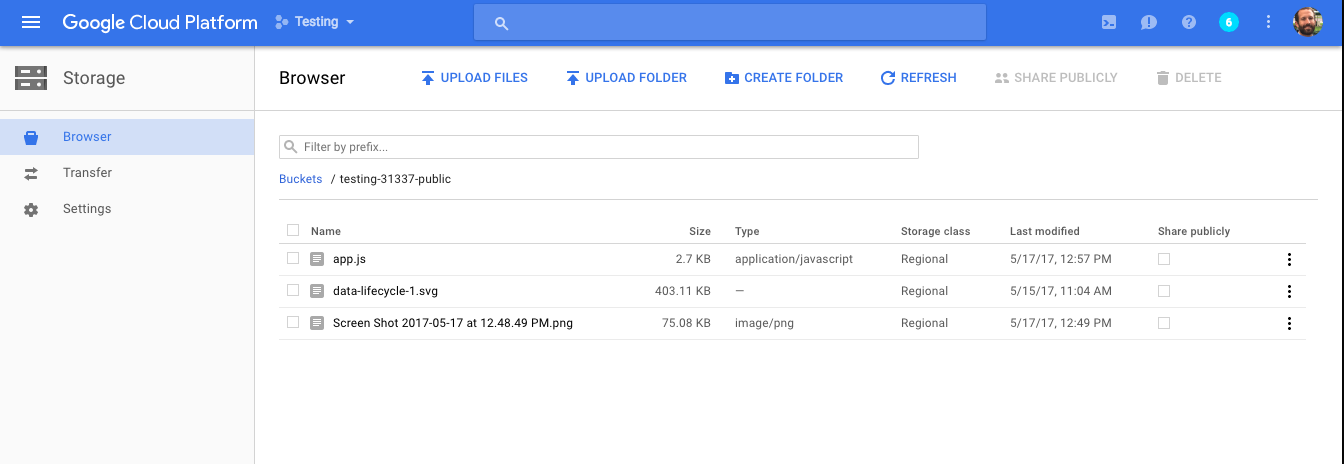

6. Cloud Console UI

The UI works well for GCS administration. GCP even has a transfer service for files in S3 buckets on AWS or other s3 buckets elsewhere. One thing that is lacking in the portal currently would be object lifecycle management. This is nice for automated archiving to Coldline, the cheaper object storage for infrequently accessed files or files over a certain age in buckets. For now you can only modify object lifecycle via gsutil or via the API. Like most GCP features, they start at the function/API level then make their way into the portal (the way it should be IMO) and I'm fine with that. I expect object lifecycle rules to be implemented in the GCP portal at some point in the future. 😃
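Since lifecycle rules are set through gsutil or the API, here is a minimal sketch that builds a lifecycle configuration for moving older objects to Coldline. `coldline_lifecycle` is a hypothetical helper and the 365-day age is purely illustrative; pick a threshold that matches your own access patterns.

```python
import json


def coldline_lifecycle(age_days):
    """Build a lifecycle config that moves objects older than age_days to Coldline."""
    if age_days < 0:
        raise ValueError("age_days must be non-negative")
    return {
        "rule": [
            {
                "action": {"type": "SetStorageClass", "storageClass": "COLDLINE"},
                "condition": {"age": age_days},  # object age in days
            }
        ]
    }


if __name__ == "__main__":
    # Illustrative threshold only.
    print(json.dumps(coldline_lifecycle(365), indent=2))
```

Saving the printed JSON to a file such as lifecycle.json and running `gsutil lifecycle set lifecycle.json gs://YOUR_BUCKET` (with your own bucket name) would apply the rule.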

In summary, I've used a few GCP samples and tutorials that are available to show different ways to get files onto GCS. GCS is flexible, with many ingress options that can be integrated into systems or applications quite easily! In 2017 the use cases for object storage are abundant and GCP makes it easy to send and receive files in GCS.

Leave a comment for any interesting use cases for GCS that I may have missed or that we should explore. Thanks!

Check my blog for more updates.

Source: https://medium.com/google-cloud/use-cases-and-a-few-different-ways-to-get-files-into-google-cloud-storage-c8dce8f4f25a

Posted by: dumontaftes1955.blogspot.com
